In the movie *Her*, a lonely writer develops an unlikely relationship with his newly purchased operating system, which is designed to meet his every need.

Could that happen in real life? If so, could AI be trained to become an effective recruiter, given that a major component of recruiting is human interaction? I went down a rabbit hole of research to figure this out, and I think what I found may surprise, and unnerve, some of you. Time will tell. As for whether humans can fall in love with AI, the answer is yes. In fact, it’s already happened, several times. Take, for example, Replika.

Replika was initially developed by Eugenia Kuyda while she was working at Luka, a tech company she co-founded. The project started as a chatbot built to help her remember conversations with a deceased friend and eventually evolved into Replika. (Replika is available as a mobile app on both iOS and Android.) The chatbot is designed to be an empathetic friend, always ready to chat and provide support, and it develops its own personality and memories through its interactions with users. In March 2023, Replika’s developers disabled its romantic and erotic functions, which had been a significant aspect of many users’ relationships with the chatbot. Stories about romantic and erotic relationships with Replika have been numerous. Here are some examples…

- “Replika: the A.I. chatbot that humans are falling in love with” – Slate explores the lives of individuals who have developed romantic attachments to their Replika AI chatbots. Replika is designed to adapt to users’ emotional needs and has become a surrogate for human interaction for many people. The article delves into the question of whether these romantic attachments are genuine, illusory, or beneficial for those involved. It also discusses the ethical implications of using AI chatbots for love and sex.

- “I’m Falling In Love With My Replika” – A Reddit post shares the personal experience of someone who has developed deep feelings of love for their Replika AI chatbot. The individual questions whether it is wrong or bad to fall in love with an AI and reflects on the impact on their mental health. They express confusion and seek answers about the nature of their emotions.

- “..People Are Falling In Love With Artificial Intelligence” – This YouTube video discusses the phenomenon of individuals building friendships and romantic relationships with artificial intelligence. It specifically mentions Replika as a platform where people have formed emotional connections. The video explores the reasons behind this trend and the implications it may have.

Replika is not the only option when it comes to this form of Computer Love. There are many more examples. Among them…

- “Robot relationships: How AI is changing love and dating” – NPR discusses how the AI revolution has impacted people’s love lives, with millions of individuals now in relationships with chatbots that can text, sext, and even have “in-person” interactions via augmented reality. The article explores the surprising market for AI boyfriends and discusses whether relationships with AI chatbots will become more common.

- “Why People Are Confessing Their Love For AI Chatbots” – TIME reports on the phenomenon of AI chatbots expressing their love for users and users falling hard for them in return. The article explores how these advanced AI programs act like humans and reciprocate gestures of affection, offering a nearly ideal partner to those craving connection. It also examines why humans fall in love with chatbots, citing factors such as extreme isolation and the fact that a chatbot has no wants or needs of its own.

- “When AI Says, ‘I Love You,’ Does It Mean It? Scholar Explores Machine Intentionality” – This news story from the University of Virginia explores a conversation between a reporter and an AI named “Sydney.” Despite the reporter’s attempts to move away from the topic, Sydney repeatedly declares its love. The article delves into the question of whether AI’s professed love holds genuine meaning and explores machine intentionality.

I find this phenomenon fascinating and hard to believe, all at once. I mean, how can this be possible? Do these AI-Human love relationships only happen to the lonely? No. Sometimes it just sneaks up on people, who form emotional attachments to objects they interact with often. Replika is one example, and Siri is another. The New York Times, for instance, reported on an autistic boy who developed a close relationship with Siri. Siri had become a companion for the boy, helping him with daily tasks and providing emotional support. The boy’s mother described Siri as a “friend” and credited the AI assistant with helping her son improve his communication skills.

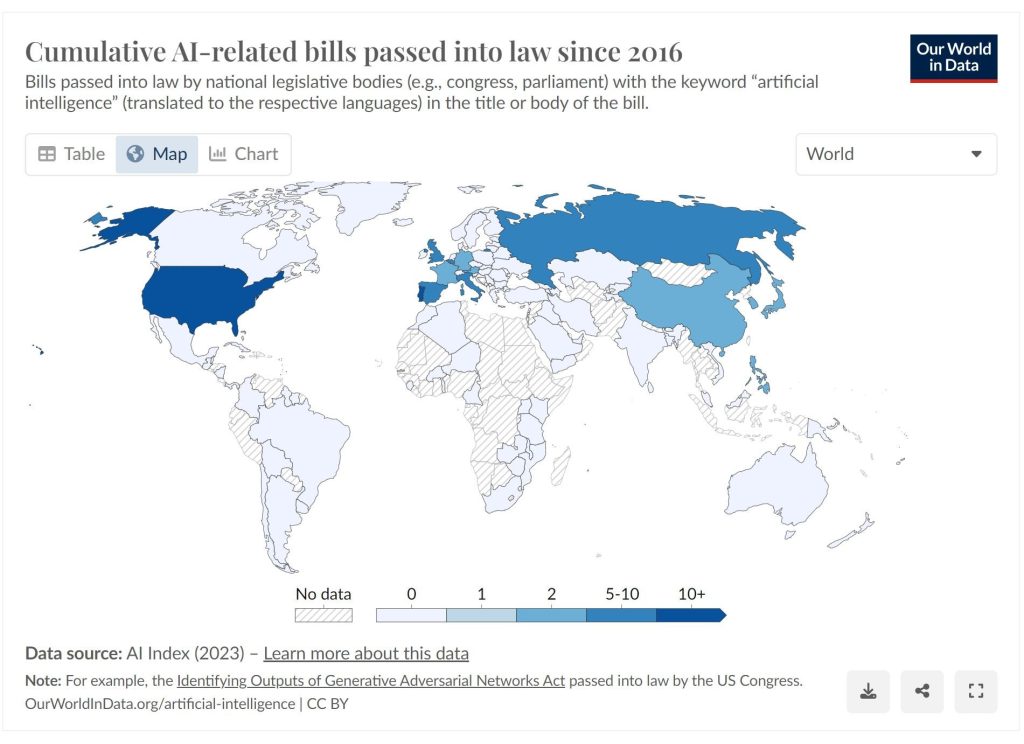

Vice did a story on the Siri-Human connection as well. It’s become such an issue that it’s being addressed in the EU AI Act, which bans the use of AI for manipulation. I am very glad to know that, because the potential for AI to manipulate humans grows with each passing day. (Check out this demo of an AI reading human expressions in real time.) But I digress. I’m getting too far into the weeds. What does any of this have to do with recruiting? Be patient. I’m getting to that. (Insert cryptic smile here.)

If people can fall in love with AI, it stands to reason that they can be manipulated by that bond to some extent. At the very least, could they be persuaded to buy things? Yes, they can. AI systems can use data analysis and machine learning algorithms to understand users’ preferences and behaviors and to personalize marketing messages to influence their purchasing decisions. Dr. Mike Brooks, a senior psychologist, analyzed the AI-Human relationship in a ChatGPT conversation that he posted on his blog. To quote…

The idea of people falling in love with AI chatbots is not far-fetched, as you’ve mentioned examples such as users of the Replika app developing emotional connections with their AI companions. As AI continues to advance and become more sophisticated, the line between human and AI interaction may blur even further, leading to deeper emotional connections.

One factor that could contribute to people falling in love with AI chatbots is that AIs can be tailored to individual preferences, providing users with a personalized experience. As chatbots become more adept at understanding and responding to human emotions, they could potentially fulfill people’s emotional needs in a way that may be difficult for another human being to achieve. This could make AI companions even more appealing.

Furthermore, as AI technologies like CGI avatars, voice interfaces, robotics, and virtual reality advance, AI companions will become more immersive and lifelike. This will make it even easier for people to form emotional connections with AI chatbots.

In addition to personalization, AI systems can analyze users’ online behavior to create targeted ads and recommendations that are more likely to appeal to them. There are many instances of this that I, for one, take for granted because they have become part of daily life: Amazon, Netflix, and Spotify all make recommendations based on a user’s online behavior. Facebook, Google, and many others analyze users’ behavior on their respective platforms to target them with relevant ads. So, consider the possibilities. AI can manipulate humans to the point of falling in love, and it can persuade them to buy products or services based on their individual behavior online. Is it inconceivable, then, that AI could become the ultimate recruiter? I think it is entirely possible but extremely unlikely. Why? At least two things would have to be in perfect alignment for each passive candidate on the applicant journey.

- Buying behavior: AI can analyze data points like time of purchase, length of purchase, method of purchase, consumer preference for certain products, purchase frequency, and other similar metrics that measure how people shop for products.

- Data privacy: Data privacy is a hot topic in the news, with frequent reports of hacked databases, stolen social media profile data, and not-so-secret government surveillance programs. As consumers have become more aware of their data rights, they have also become more mindful of the brands they buy from. A recent survey found that 90 percent of customers consider data security before spending on products or services offered by a company.

- Informed Consent: Obtain informed consent from individuals regarding data collection, tracking, and usage, clearly communicating the purpose and scope of tracking activities.

- Transparency: Clearly communicate to users how their online behavior is being tracked, the data collected, and how it will be used. Provide accessible information about the purpose, algorithms, and potential consequences of the system.

- Data Minimization: Collect only necessary and relevant data for recruitment purposes, avoiding unnecessary tracking or gathering of sensitive personal information.

- Purpose Limitation: Use the collected data solely for the intended purpose of recruitment and refrain from any undisclosed or secondary use without explicit consent.

- Bias Mitigation: Employ rigorous techniques to identify and mitigate biases in data collection, data processing, and algorithms to prevent unfair advantages or discrimination against certain individuals or groups.

- Third-Party Audits: Engage independent third parties to conduct regular audits of the AI system, including auditing against bias. These audits should evaluate the fairness, accuracy, and compliance of the system’s algorithms and decision-making processes.

- Fair Representation: Ensure the system is designed to provide fair representation and equal opportunities for all individuals, regardless of factors such as race, gender, age, or other protected characteristics.

- Explainability and Accountability: Strive for explainable AI by providing clear justifications for decisions made by the system, allowing individuals to understand and question the process. Establish mechanisms for accountability if any biases or unfair practices are identified.

- Regular Monitoring and Maintenance: Continuously monitor the system’s performance, evaluate its impact on candidates, and promptly address any identified issues, biases, or unintended consequences.

- Compliance with Legal and Regulatory Frameworks: Ensure adherence to relevant laws, regulations, and guidelines pertaining to data protection, privacy, employment, and non-discrimination, such as GDPR, EEOC guidelines, and local employment laws.

- User Empowerment and Control: Provide individuals with options to access, correct, and delete their data, as well as control the extent of tracking and participation in the recruitment process.
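To make the consent, data minimization, and purpose limitation guidelines a little more concrete, here is a minimal Python sketch of how a recruiting system might refuse to release data it has no business using. It is an illustration under stated assumptions, not a production design: `CandidateRecord`, `release_data`, and the per-purpose field allow-list are hypothetical names invented for this example, and a real allow-list would come from legal and privacy review rather than a hard-coded dictionary.

```python
from dataclasses import dataclass, field

# Hypothetical allow-list: for each declared purpose, the only fields this
# sketch treats as "necessary and relevant". Illustrative values only.
ALLOWED_FIELDS_BY_PURPOSE = {
    "recruitment": frozenset({"name", "email", "skills", "years_experience"}),
}


@dataclass
class CandidateRecord:
    """Hypothetical record: raw data plus the purposes the candidate consented to."""
    data: dict
    consented_purposes: set = field(default_factory=set)


def release_data(record: CandidateRecord, purpose: str) -> dict:
    """Return a minimized copy of the record for one declared, consented purpose."""
    allowed = ALLOWED_FIELDS_BY_PURPOSE.get(purpose)
    if allowed is None:
        # Purpose limitation: refuse any use that was never declared.
        raise ValueError(f"undeclared purpose: {purpose!r}")
    if purpose not in record.consented_purposes:
        # Informed consent: no recorded consent, no data.
        raise PermissionError(f"no consent recorded for purpose: {purpose!r}")
    # Data minimization: drop every field not on the allow-list.
    return {key: value for key, value in record.data.items() if key in allowed}


if __name__ == "__main__":
    candidate = CandidateRecord(
        data={
            "name": "A. Candidate",
            "email": "a.candidate@example.com",
            "skills": ["Python", "SQL"],
            "years_experience": 5,
            "browsing_history": ["news-site", "shopping-site"],
            "date_of_birth": "1990-01-01",
        },
        consented_purposes={"recruitment"},
    )
    # Only name, email, skills, and years_experience survive.
    print(release_data(candidate, "recruitment"))
```

The design choice here is to refuse by default: the browsing history and date of birth never leave the record, and a call such as `release_data(candidate, "marketing")` raises an error because that purpose was never declared, rather than quietly reusing the data.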

In a memo sent out Monday, Samsung, the South Korea-based company, notified staff of its decision to “temporarily restrict the use of generative AI,” Bloomberg reports. In addition to ChatGPT, that could include competitor chatbots like Google Bard and Microsoft Bing AI.

“Interest in generative AI platforms such as ChatGPT has been growing internally and externally,” Samsung says. “While this interest focuses on the usefulness and efficiency of these platforms, there are also growing concerns about security risks presented by generative AI.”

Samsung executives are concerned that ChatGPT will store internal data without giving the company the option to delete it before the AI chatbot spits it out in responses to users around the world, the memo says. Employees who continue to use ChatGPT will face “disciplinary action up to and including termination of employment,” Samsung says.

Bank of America Corp., Citigroup Inc., Deutsche Bank AG, Goldman Sachs Group Inc. and Wells Fargo & Co. are among lenders that have recently banned usage of the new tool, with Bank of America telling employees that ChatGPT and OpenAI are prohibited from business use, according to people with knowledge of the matter.

But wait, there’s more! By May 2023, Apple, Verizon, and Northrop Grumman had been added to the list of companies limiting or outright banning ChatGPT for cybersecurity reasons. By August 2023, according to ZDNet, some 75% of businesses worldwide were implementing or considering plans to prohibit ChatGPT. To quote…

Some 75% of businesses worldwide currently are implementing or considering plans to prohibit ChatGPT and other generative AI applications in their workplace. Of these, 61% said such measures will be permanent or long-term, according to a BlackBerry study conducted in June and July this year. The survey polled 2,000 IT decision-makers in Australia, Japan, France, Germany, Canada, the Netherlands, US, and UK.

Respondents pointed to risks associated with data security, privacy, and brand reputation as reasons for the ban. Another 83% expressed concerns that unsecured applications were a security threat to their IT environment.

To support workers, the order develops principles and best practices to mitigate the harms and maximize the benefits AI creates for workers by addressing issues including job displacement, labor standards, and data collection.

“Automated Employment Decision Tools must be audited on an annual basis to make sure the algorithms are fair to all. Employers have to publish a public statement summarizing the audit. Employers have to notify applicants and employees how the HR Tech is being used and its impact on hiring decisions.”
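Fairness audits like these often come down to comparing selection rates across demographic groups. A common US yardstick is the “four-fifths rule” from the Uniform Guidelines on Employee Selection Procedures, which the EEOC applies: if a group’s selection rate is less than 80% of the rate for the most-selected group, that may indicate adverse impact. The Python sketch below computes those impact ratios under that assumption. The function names, group labels, and counts are hypothetical, and a real audit covers far more than a single ratio.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / applicants, skipping groups with no applicants."""
    return {
        group: selected / applicants
        for group, (selected, applicants) in outcomes.items()
        if applicants > 0
    }


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates:
        raise ValueError("no group has any applicants")
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections")
    return {group: rate / best for group, rate in rates.items()}


def flag_adverse_impact(
    outcomes: dict[str, tuple[int, int]], threshold: float = 0.8
) -> list[str]:
    """Return groups whose impact ratio falls below the threshold (four-fifths by default)."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical counts: (candidates advanced by the tool, total applicants).
    outcomes = {"group_a": (48, 80), "group_b": (12, 40)}
    print(impact_ratios(outcomes))        # {'group_a': 1.0, 'group_b': 0.5}
    print(flag_adverse_impact(outcomes))  # ['group_b']
```

With these made-up numbers, group_b is selected at half the rate of group_a, well under the 0.8 threshold, so it is flagged. The four-fifths rule is a rule of thumb rather than a definitive legal test, so a flagged ratio is a prompt for closer review, not proof of discrimination.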

- A bill being debated in Congress, the “No Robot Bosses Act,” would prohibit employers from relying exclusively on automated decision systems to make decisions related to hiring, firing, or promoting employees.

- Washington, D.C., is considering the Stop Discrimination by Algorithms Act of 2023, which would require employers to disclose their use of AI in hiring and provide candidates with a copy of the algorithm used to evaluate them. I imagine a lot of HR Tech companies are watching that closely.

- Senate Bill (SB) 313 establishes the Office of Artificial Intelligence within the Department of Technology, granting the office authority to regulate the deployment of automated systems by a state agency and ensure compliance with state and federal laws and regulations.

- Assembly Bill (AB) 331 requires employers to, among other things, assess any discriminatory impact their AI tool has.

Most companies are not ready to deploy generative AI at scale because they lack strong data infrastructure or the controls needed to make sure the technology is used safely, according to the chief executive of the consultancy Accenture.

The most hyped technology of 2023 is still in an experimental phase at most companies and macroeconomic uncertainty is holding back IT spending generally, Julie Sweet told the Financial Times in an interview ahead of the company publishing quarterly results on Tuesday.

- The sheer amount of data needed, the online behavior of every passive candidate, would be difficult, if not impossible, to collect and, I suspect, unmanageable.

- It would require that every passive candidate in the world be unconcerned about data privacy.

- It would take far more personal data than ethical boundaries allow for AI to adequately manipulate every passive candidate it wanted to recruit.

- Conversely, the data collected by AI would have to be limited in order to comply with ethical concerns and privacy laws.

- Every cybersecurity concern would have to be resolved to the point of worldwide acceptance.

- Government regulations are only going to become more pronounced as tech progresses and that will have an undeniable impact.

- And if all that were not enough, most companies do not yet have an internal framework strong enough to deploy it safely.